Structure Inference Machines: Recurrent Neural Networks for Analyzing Relations in Group Activity Recognition.

Basic understanding

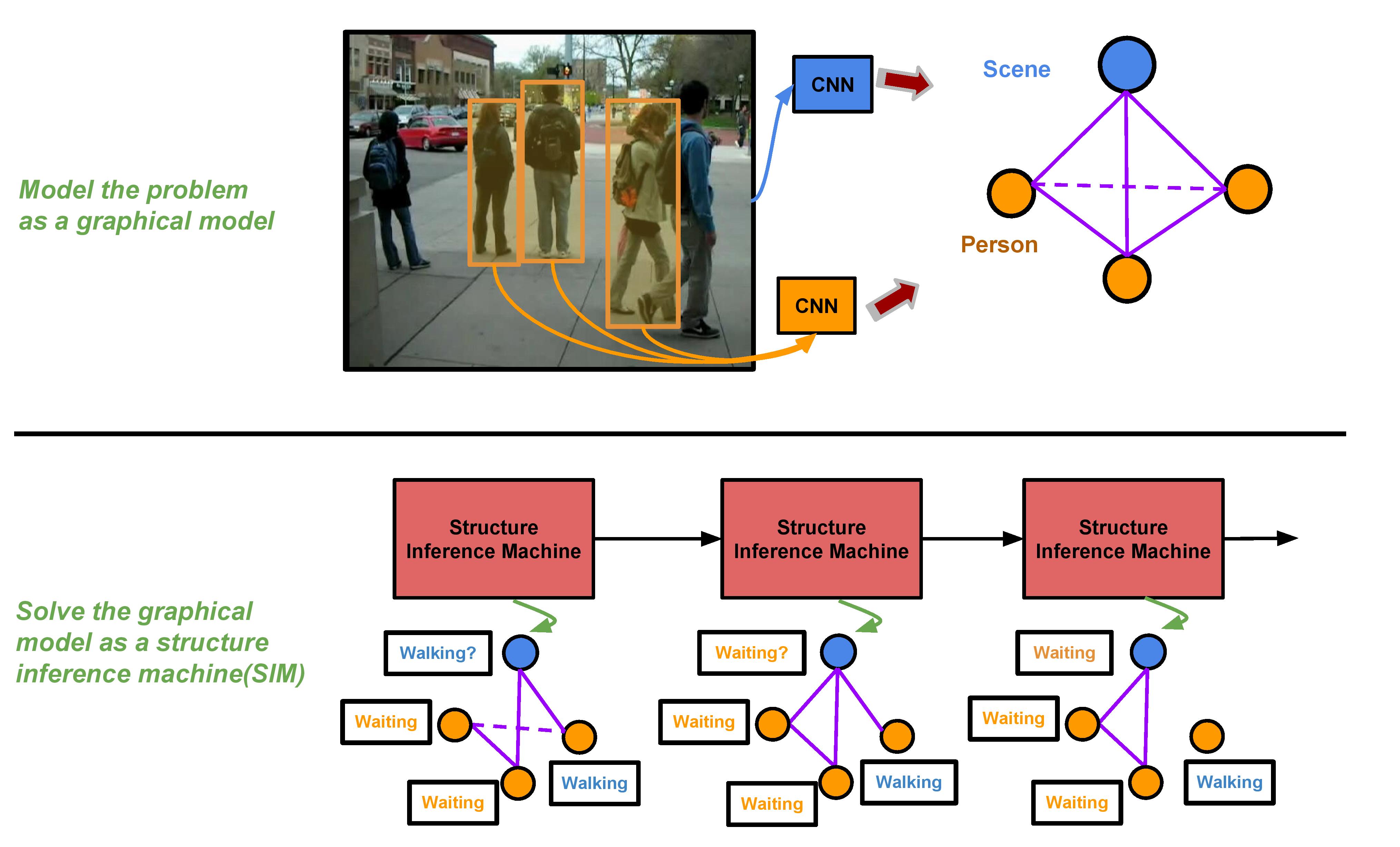

The Structure Inference Machine (SIM) is a general framework for understanding graph structures in data and the relations between entities. Here we try to give some basic intuitions about the model. In many applications, such as pose estimation, human activity understanding, human-object affordance, and segmentation, a task can be modeled as a graph structure (MRF, CRF, HMM, etc.) that encodes the relations among its parts. Pairwise relations are the most common type of interaction built into such a graph. How to embed a graph into a deep learning framework has recently emerged as an open question, and SIM was proposed as one of the first answers to it. A natural question in graph modeling is how to connect the nodes, especially in a large graph, since most connections are redundant. The gating function in SIM places an additional, learned uncertainty on each connection; it turns out that this fits naturally into an RNN and can be derived as a special type of LSTM.
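To make "pairwise relations" concrete, here is a toy pairwise graphical model in plain Python: each node has a unary score per label, each edge adds a compatibility score, and the best joint labeling is found by brute force. The scene, the labels, the `pairwise` rule, and all numbers are invented for illustration; exhaustive search only works for tiny graphs, and real CRFs rely on approximate inference instead.

```python
from itertools import product

# Toy scene with three people (hypothetical data, for illustration only).
LABELS = ("walk", "talk", "wait")

# Unary scores: how strongly each person's own appearance supports each label.
UNARY = [
    {"walk": 0.2, "talk": 1.0, "wait": 0.1},
    {"walk": 0.3, "talk": 0.8, "wait": 0.4},
    {"walk": 0.9, "talk": 0.1, "wait": 0.2},
]

# Fully connected graph: every pair of people shares an edge.
EDGES = [(0, 1), (0, 2), (1, 2)]


def pairwise(a, b):
    """Hypothetical compatibility: people in a group tend to share an action."""
    return 0.5 if a == b else 0.0


def score(labeling, use_pairwise=True):
    """Unary terms plus (optionally) pairwise terms over all edges; higher is better."""
    total = sum(UNARY[i][label] for i, label in enumerate(labeling))
    if use_pairwise:
        total += sum(pairwise(labeling[i], labeling[j]) for i, j in EDGES)
    return total


def best_labeling(use_pairwise=True):
    """Exhaustive MAP search over all joint labelings (feasible only for tiny graphs)."""
    return max(product(LABELS, repeat=len(UNARY)),
               key=lambda lab: score(lab, use_pairwise))
```

With these made-up numbers, the unary terms alone pick `("talk", "talk", "walk")`, but the pairwise terms pull the third person toward the group's label, so the best joint labeling becomes `("talk", "talk", "talk")`. Deciding which of these edges are worth keeping at all is the connection problem that the SIM gates address.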

More precisely, SIM represents the factor functions of a probabilistic graphical model (PGM) as multi-layer neural networks inside an RNN, and the recurrence of the RNN, which reuses the same weights at every step, naturally corresponds to the shared factors in the PGM. The gates in SIM are scalar values in the interval [0, 1] placed on the edges of the graph, where they act as soft thresholds on the connections. Note that the gates can also be designed as ordinary "vector gates", as in GRUs and LSTMs; in that case they modulate information propagation at the element-wise level.
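The mechanism can be sketched in plain Python under several assumptions that are ours rather than the paper's: node states are small vectors, every message is the same linear map `W_msg` of the sender's state, the node update is a `tanh`, and each gate is a sigmoid of the two endpoint states. `scalar_gate` returns one value per edge, as described above; `vector_gate` returns one value per message dimension, the GRU/LSTM-style alternative. `refine` reuses the same parameters at every step, which is the recurrence that mirrors the PGM's shared factors. All function names are hypothetical, and this is not the exact SIM update.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    """Dense matrix-vector product on nested lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def scalar_gate(h_i, h_j, w_gate, b_gate):
    """Hypothetical scalar edge gate: one value in [0, 1] for the whole message."""
    z = sum(w * x for w, x in zip(w_gate, h_i + h_j)) + b_gate
    return sigmoid(z)


def vector_gate(h_i, h_j, W_gate, b_gate):
    """Hypothetical vector gate: one value in [0, 1] per message dimension."""
    return [sigmoid(z + b) for z, b in zip(matvec(W_gate, h_i + h_j), b_gate)]


def gated_update(states, edges, W_msg, gate_fn):
    """One round of gated message passing on an undirected graph.

    W_msg is shared by every edge (the shared factor). gate_fn(h_i, h_j)
    returns a float (scalar gate) or a list of floats (vector gate).
    """
    new_states = []
    for i, h_i in enumerate(states):
        agg = [0.0] * len(h_i)
        for a, b in edges:
            if i not in (a, b):
                continue
            j = b if a == i else a
            msg = matvec(W_msg, states[j])
            g = gate_fn(h_i, states[j])
            # A scalar gate scales the whole message; a vector gate scales each entry.
            g_vec = g if isinstance(g, list) else [g] * len(msg)
            agg = [s + gk * mk for s, gk, mk in zip(agg, g_vec, msg)]
        new_states.append([math.tanh(x + m) for x, m in zip(h_i, agg)])
    return new_states


def refine(states, edges, W_msg, gate_fn, steps=3):
    """Iterative refinement: apply the same gated update `steps` times."""
    for _ in range(steps):
        states = gated_update(states, edges, W_msg, gate_fn)
    return states
```

For example, passing `lambda hi, hj: 0.0` as `gate_fn` closes every edge, so each node is updated from its own state alone, while `lambda hi, hj: [1.0, 0.0]` lets only the first message dimension through.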

A picture of the SIM model is shown below. This kind of model is also known as an "iterative refinement model", because it repeatedly applies the same update to refine its estimates.

From the attention model's angle

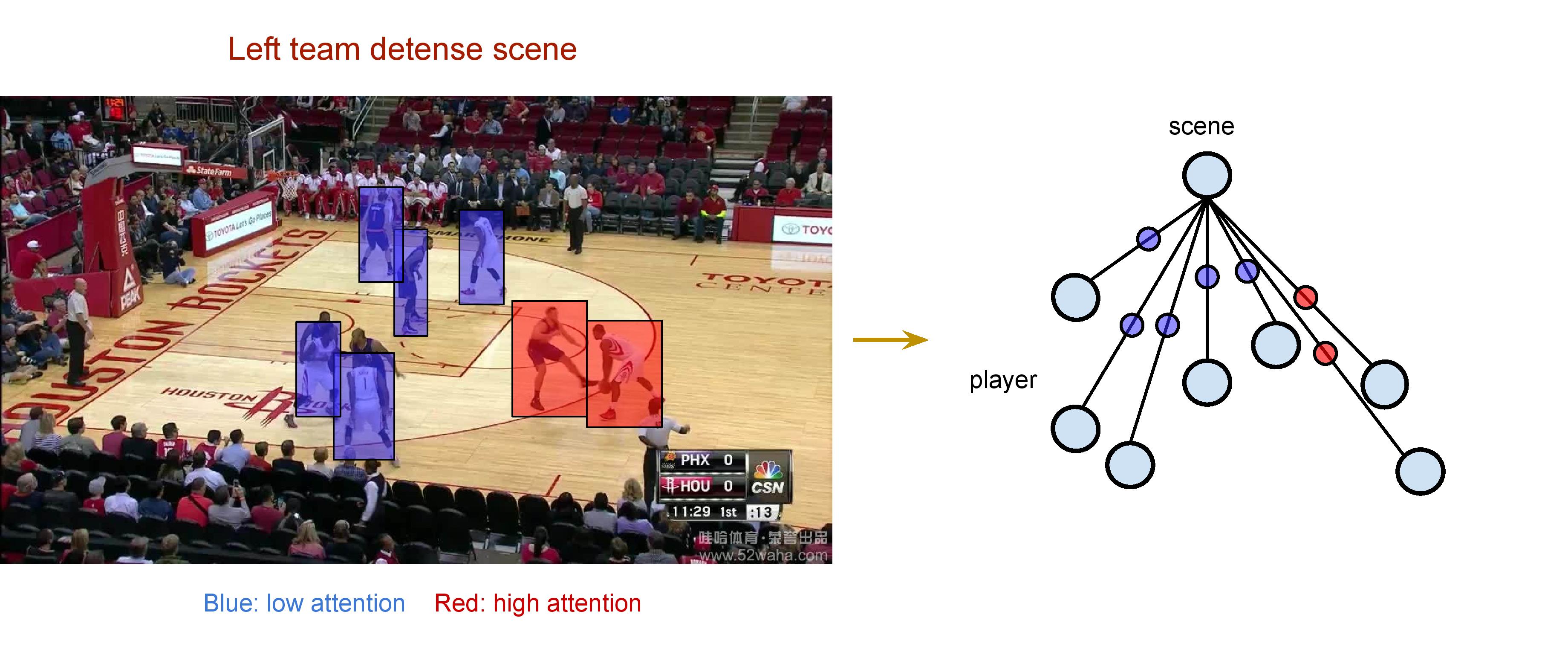

The gates in the SIM model can be understood as applying an attention mechanism within the graphical model. For a more intuitive explanation, we made up an example using a basketball picture, because sports videos often contain more intense interactions than everyday human activity videos and are easier to explain. The following picture shows an application that aims to classify the scene at the frame level. A recent paper [1] proposes that identifying "key actors" is important for understanding sports video scenes. Its authors build their model with an attention mechanism and report strong performance. The picture below shows how this attention model can be understood from the SIM perspective.

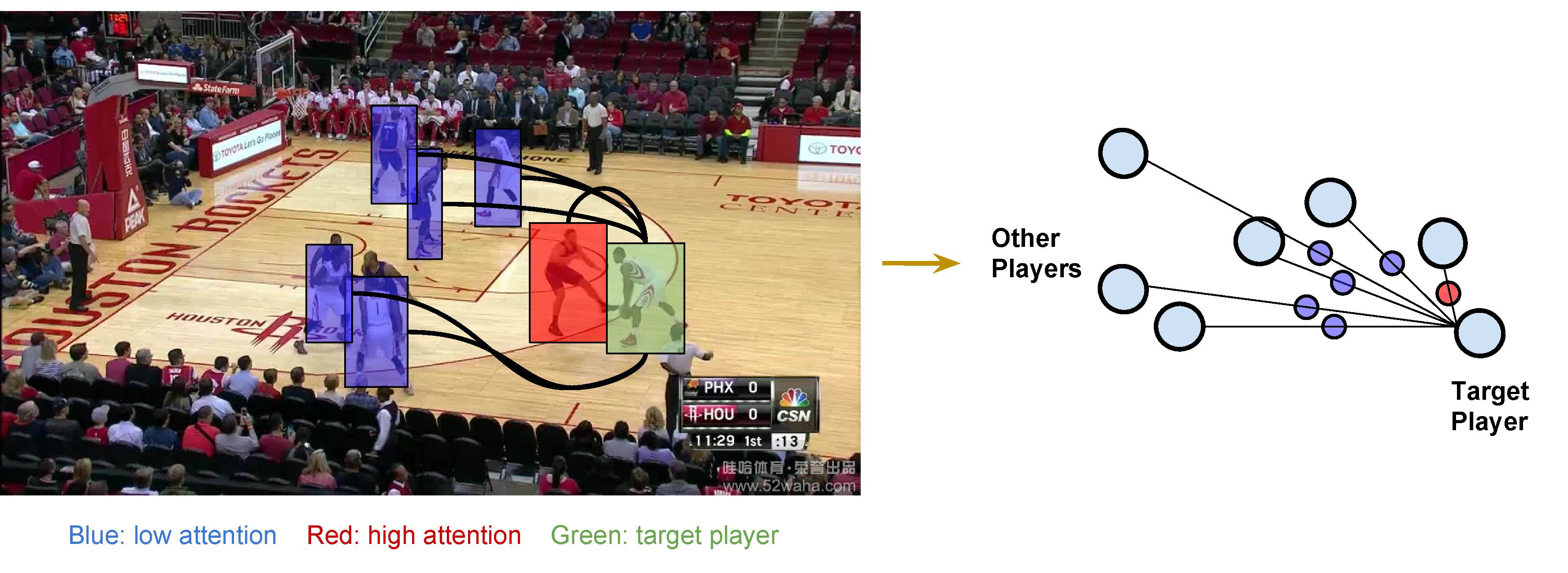
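The difference between the two readings can be sketched as follows. A softmax attention makes the players compete for a fixed budget of weight, while SIM-style gates are independent sigmoids, one per edge between the scene node and a player. Everything here is an assumption for illustration: player features are short vectors, relevance is a dot product with a hypothetical scene `query` vector, and `key_actor` simply takes the largest weight. It is neither the model of [1] nor the exact SIM gate.

```python
import math


def softmax(scores):
    """Numerically stable softmax: non-negative weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def relevance(query, feat):
    """Dot-product relevance of one player's feature to the scene query."""
    return sum(q * f for q, f in zip(query, feat))


def attention_pool(query, players):
    """Soft attention: players compete for weight, and the scene feature is
    the attention-weighted mean of the player features."""
    weights = softmax([relevance(query, p) for p in players])
    pooled = [sum(w * p[k] for w, p in zip(weights, players))
              for k in range(len(query))]
    return weights, pooled


def edge_gates(query, players):
    """SIM-style reading: an independent [0, 1] gate on each scene-player edge,
    so several players can be strongly 'on' at the same time."""
    return [sigmoid(relevance(query, p)) for p in players]


def key_actor(weights):
    """Index of the player with the largest attention weight."""
    return max(range(len(weights)), key=weights.__getitem__)
```

With `query = [1.0, 0.0]` and players `[[2.0, 0.1], [0.1, 1.0], [0.5, 0.5]]`, the softmax weights sum to 1 and single out player 0 as the key actor, whereas the three independent gates add up to more than 1.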

Generalizing the idea, the intense interactions between players can also be modeled as the attention of a target player to each of the other players. This is only one part of the graph problem that SIM solves, but it suggests some potential applications. For example, one could analyze or track a player's attention over time to see how they interact with teammates, or anticipate whom the current player will pass the ball to.
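As a sketch of the tracking idea, assume that the target player and every other player are each described by a feature vector per frame. The code computes a softmax attention of the target to every other player in every frame, then applies a deliberately simple, hypothetical heuristic: the player who received the most attention over the last few frames is the predicted pass receiver. The function names and the `window` parameter are our own; this illustrates the proposed application rather than a validated predictor.

```python
import math


def softmax(scores):
    """Numerically stable softmax: non-negative weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_track(target_feats, others_feats):
    """Per-frame attention of one target player to each of the other players.

    target_feats[t] is the target's feature vector at frame t;
    others_feats[t][j] is player j's feature vector at frame t.
    """
    track = []
    for query, others in zip(target_feats, others_feats):
        scores = [sum(q * o for q, o in zip(query, feat)) for feat in others]
        track.append(softmax(scores))
    return track


def likely_receiver(track, window=3):
    """Hypothetical heuristic: the player with the highest mean attention
    over the last `window` frames (or all frames, if there are fewer)."""
    recent = track[-window:]
    n = len(recent[0])
    means = [sum(frame[j] for frame in recent) / len(recent) for j in range(n)]
    return max(range(n), key=means.__getitem__)
```

A sequence in which the target first attends to player 0 and then, over the last three frames, to player 1 yields player 1 as the predicted receiver; truncating the sequence to the first two frames yields player 0.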

From the structure learning angle

The model can use its gates to perform structure learning. Low-confidence gates tend to close and cut their connections, while high-confidence gates keep the connections open. All gates here are soft, so the graph structure is inferred jointly with the rest of the model, which converges to a local minimum. With temporal information added, the SIM model could potentially produce a dynamic sparse graph structure at each time point, which could be useful for analyzing how entity interactions evolve over time.

Relevant works

[1]. Ramanathan, V., et al. (2015). Detecting events and key actors in multi-person videos. arXiv preprint arXiv:1511.02917.

[2]. Liang, X., Shen, X., Feng, J., Lin, L., and Yan, S. (2016). Semantic Object Parsing with Graph LSTM. arXiv preprint arXiv:1603.07063.

[3]. Carreira, J., Agrawal, P., Fragkiadaki, K., and Malik, J. (2015). Human pose estimation with iterative error feedback. arXiv preprint arXiv:1507.06550.